Text-to-image (T2I) models have recently gained widespread adoption. This has raised concerns about protecting intellectual property rights and increased the demand for mechanisms that prevent the generation of specific artistic styles. Existing style extraction methods typically require collecting custom datasets and training specialized models, which is resource-intensive, time-consuming, and often impractical for real-time applications.

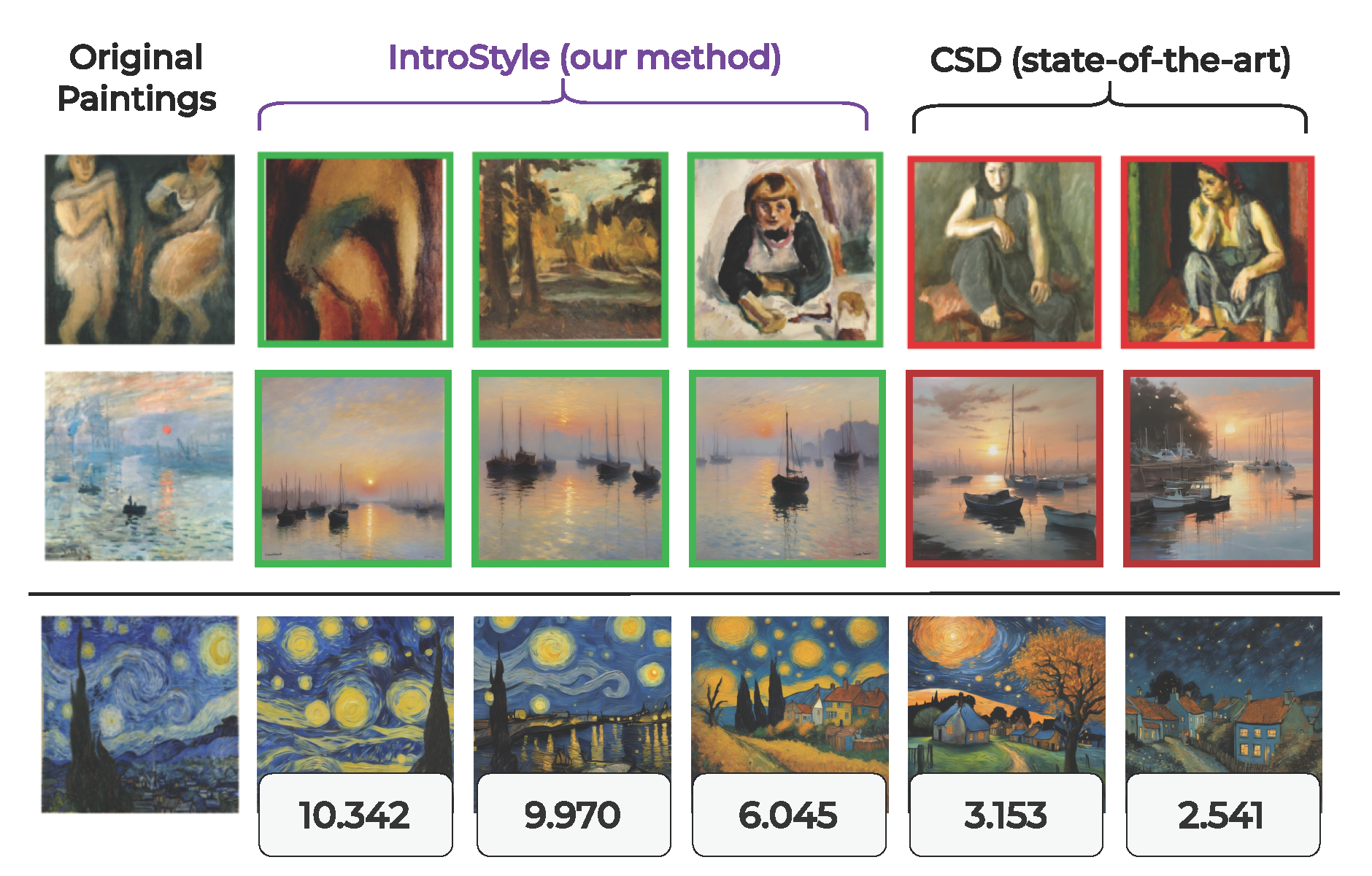

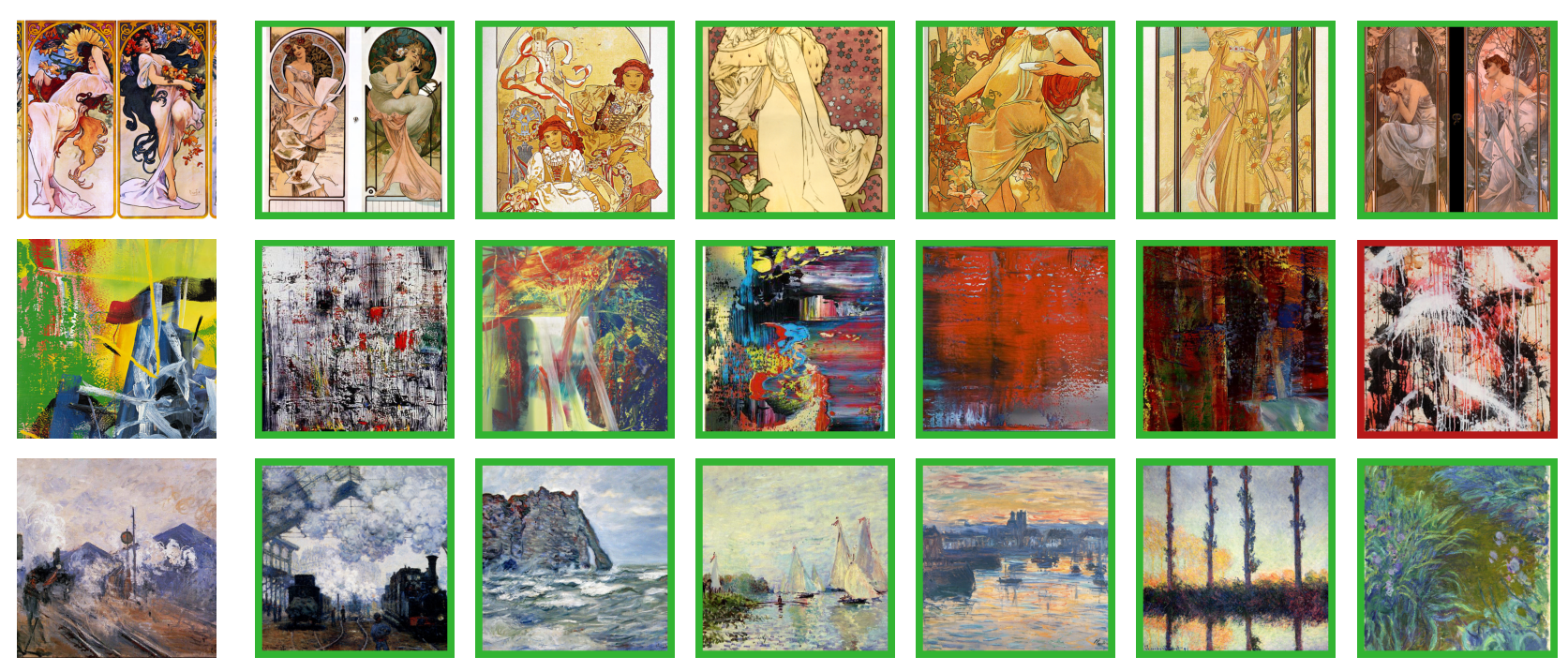

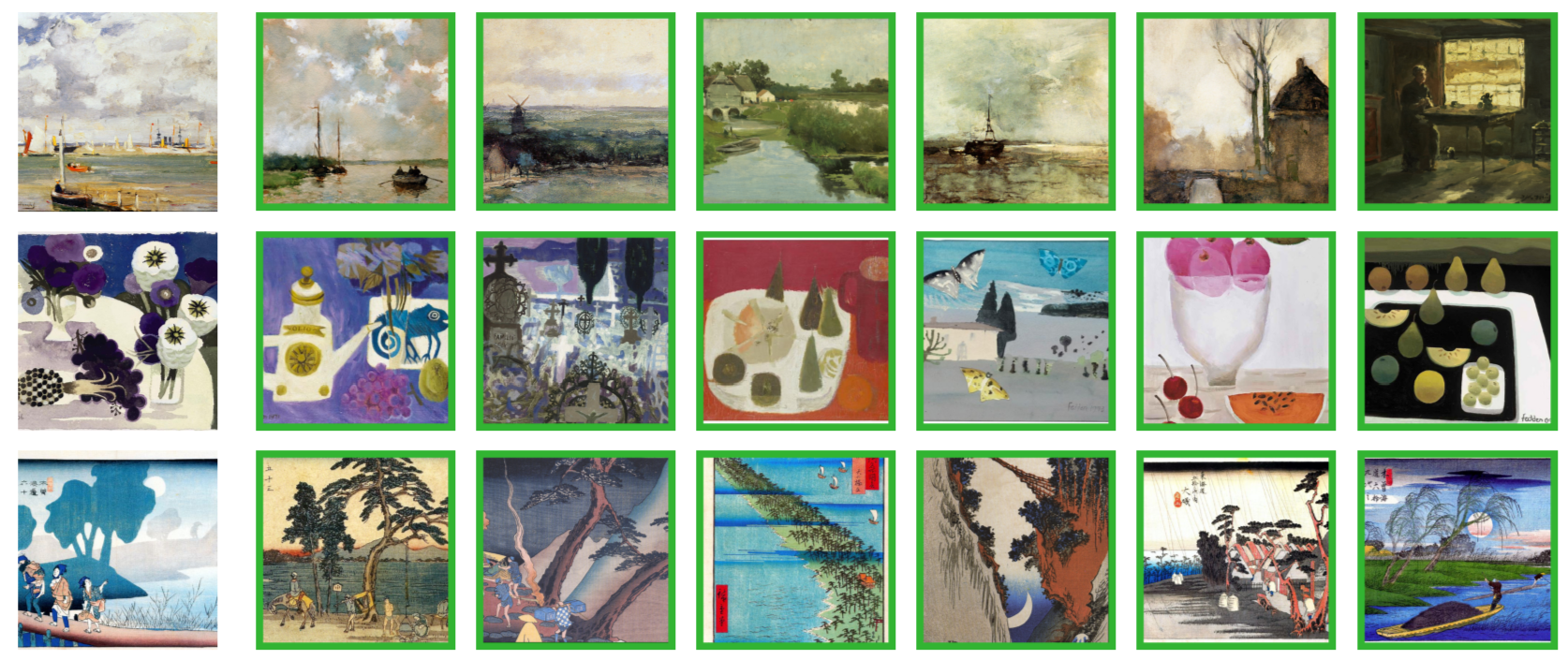

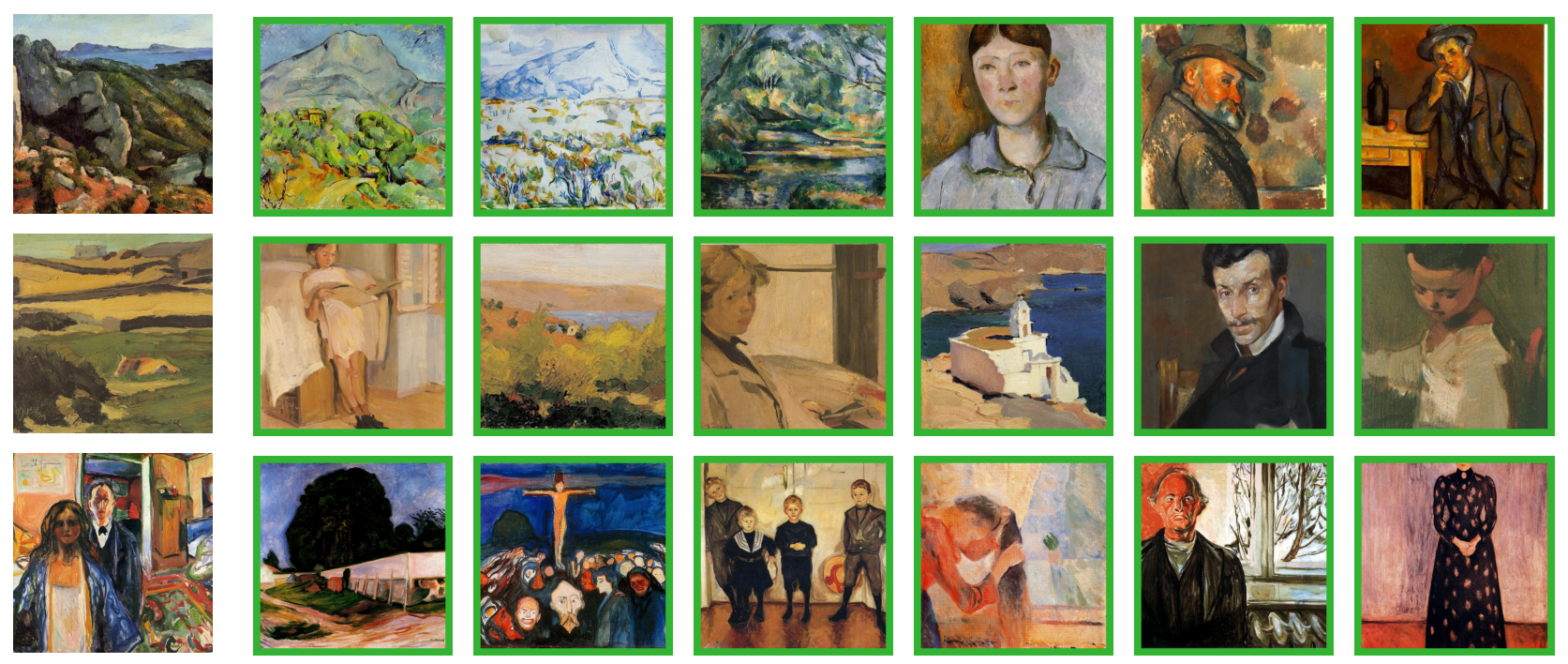

We present a novel, training-free framework for the style attribution problem that uses only the features produced by a diffusion model, with no external modules or retraining. We call it Introspective Style attribution (IntroStyle) and show that it outperforms state-of-the-art models for style attribution. We also introduce Artistic Style Split (ArtSplit), a synthetic dataset that isolates artistic style in order to evaluate fine-grained style attribution performance. Experiments on the WikiArt and DomainNet datasets show that IntroStyle is robust to the dynamic nature of artistic styles and outperforms existing methods by a wide margin.

IntroStyle is a method that helps AI understand and compare the artistic styles of images. It does this by extracting special features (called "style features") from each image using a pre-trained diffusion model, a powerful type of generative image model.

Here's a breakdown:

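From the feature tensor that the diffusion model produces for an image, they calculate two things for each channel (kind of like a color or feature layer):

- the mean of the channel's values across spatial positions, and
- the variance of those values.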

These numbers together form the IntroStyle feature representation for that image. It's like a fingerprint of the image's style.
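To make the per-channel statistics concrete, here is a minimal sketch in plain Python. It assumes the two per-channel numbers are the mean and variance over spatial positions, and that the feature tensor has already been extracted from the diffusion model and flattened to C channels of H*W values each; that extraction step is not shown. The function name `introstyle_features` is hypothetical, not the authors' code.

```python
from statistics import fmean, pvariance


def introstyle_features(feature_tensor):
    """Hypothetical helper: per-channel (means, variances) of a C x (H*W) tensor.

    feature_tensor: list of C channels, each a flat list of that channel's
    spatial activations. Assumed to come from a diffusion model upstream.
    """
    means = [fmean(channel) for channel in feature_tensor]
    # Population variance of each channel, reusing the mean computed above.
    variances = [pvariance(channel, mu=m) for channel, m in zip(feature_tensor, means)]
    return means, variances


# Toy 2-channel "feature map" with 4 spatial positions per channel.
toy_features = [[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 2.0, 2.0]]
means, variances = introstyle_features(toy_features)
print(means, variances)  # [2.5, 1.0] [1.25, 1.0]
```

Because the statistics are pooled over all spatial positions, the resulting fingerprint no longer records *where* things appear in the image. This is one intuition for why such statistics can capture style rather than content.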

To measure how similar two styles are, they use the 2-Wasserstein distance, which quantifies how different the two style fingerprints are. The smaller the distance, the more similar the styles.
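A minimal sketch of the comparison step, assuming each fingerprint is treated as a Gaussian with independent channels (a diagonal covariance built from the per-channel variances). Under that assumption the 2-Wasserstein distance has a closed form: the squared distance is the sum of squared mean differences plus the sum of squared standard-deviation differences. The function `wasserstein2`, the style names, and the toy numbers are hypothetical illustrations, not taken from the paper.

```python
import math


def wasserstein2(means_a, vars_a, means_b, vars_b):
    """Hypothetical helper: W2 distance between two diagonal Gaussians.

    W2^2 = sum_i (mu_a_i - mu_b_i)^2 + sum_i (sigma_a_i - sigma_b_i)^2,
    where sigma is the per-channel standard deviation (sqrt of variance).
    """
    mean_term = sum((ma - mb) ** 2 for ma, mb in zip(means_a, means_b))
    std_term = sum((math.sqrt(va) - math.sqrt(vb)) ** 2 for va, vb in zip(vars_a, vars_b))
    return math.sqrt(mean_term + std_term)


# Toy fingerprints as (means, variances); a real system would use many channels.
query = ([0.0, 0.0], [1.0, 1.0])
references = {
    "style_b": ([3.0, 4.0], [1.0, 1.0]),  # distance 5.0 from the query
    "style_c": ([0.0, 0.0], [4.0, 1.0]),  # distance 1.0 from the query
}

# Attribution by ranking: the closest reference style comes first.
ranked = sorted(references, key=lambda name: wasserstein2(*query, *references[name]))
print(ranked)  # ['style_c', 'style_b']
```

Sorting reference fingerprints by distance to a query turns the metric directly into a retrieval-style attribution: the top-ranked entries are the candidate source styles.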

Despite its simplicity, IntroStyle works very well on style attribution tasks, where the AI needs to identify or compare artistic styles across images.

Current datasets aren't good enough for testing how well an AI can recognize and match artistic styles (like the brushstroke or color technique of Van Gogh or Picasso). These datasets often mix up the content (what's shown) with the style (how it's shown), making it hard to evaluate style understanding properly.

We made a new dataset called ArtSplit. Here's how we built it:

This new dataset helps researchers clearly test how well AI understands style (independent of content), because the style and content are deliberately kept separate in the prompts.

@InProceedings{kumar2025introstyle,
  author = {Kumar, Anand and Mu, Jiteng and Vasconcelos, Nuno},
  title = {IntroStyle: Training-Free Introspective Style Attribution using Diffusion Features},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  year = {2025},
}